Blog

Reflections on the intersection of artificial intelligence and education, exploring how we can design learning that honors our humanity.

Each piece examines the questions that emerge when we bring together technical innovation with pedagogical purpose.

Recent Reflections

33.3: Making the Invisible Side of AI Visible

My sons care deeply about ending war and protecting artists, yet trust technology without question. I built 33 interactive experiences to help young people understand the hidden costs of the systems they use every day—before they lose their idealism.

Doubling Down on Discovering Your Writing Soul

Inspired by Zadie Smith's essay on finding authentic voice through structured thinking, I built an AI-powered tool that guides writers through Socratic dialogue to discover their core beliefs, writing process, and intellectual identity.

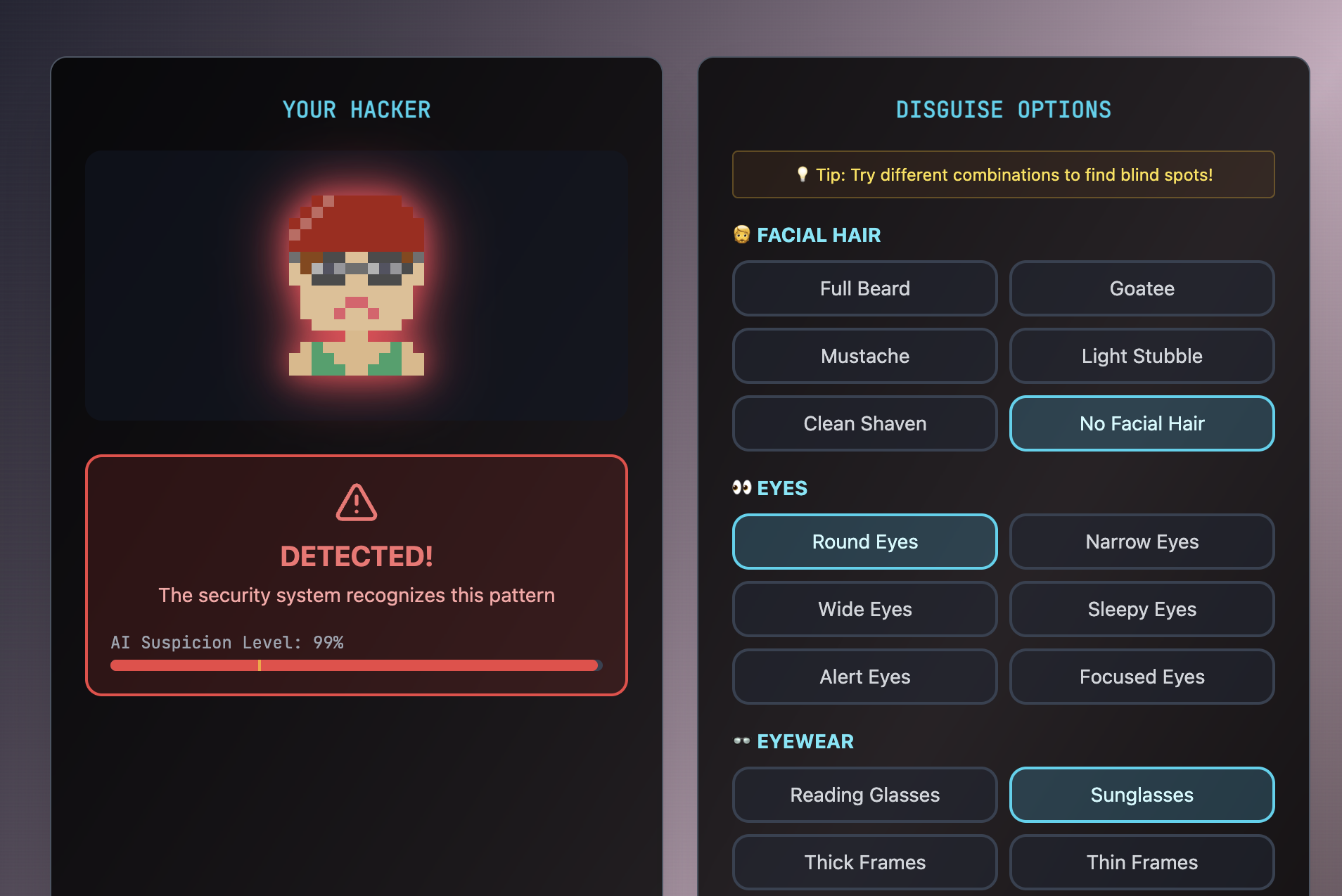

When Kids Play 'Who is the Hacker': How a Simple Game Reveals Our Hidden Biases

Inspired by Joy Buolamwini's Gender Shades project, we created a game that makes algorithmic bias tangible for children. Watch as kids discover their own unconscious biases—and the power they have to change them.

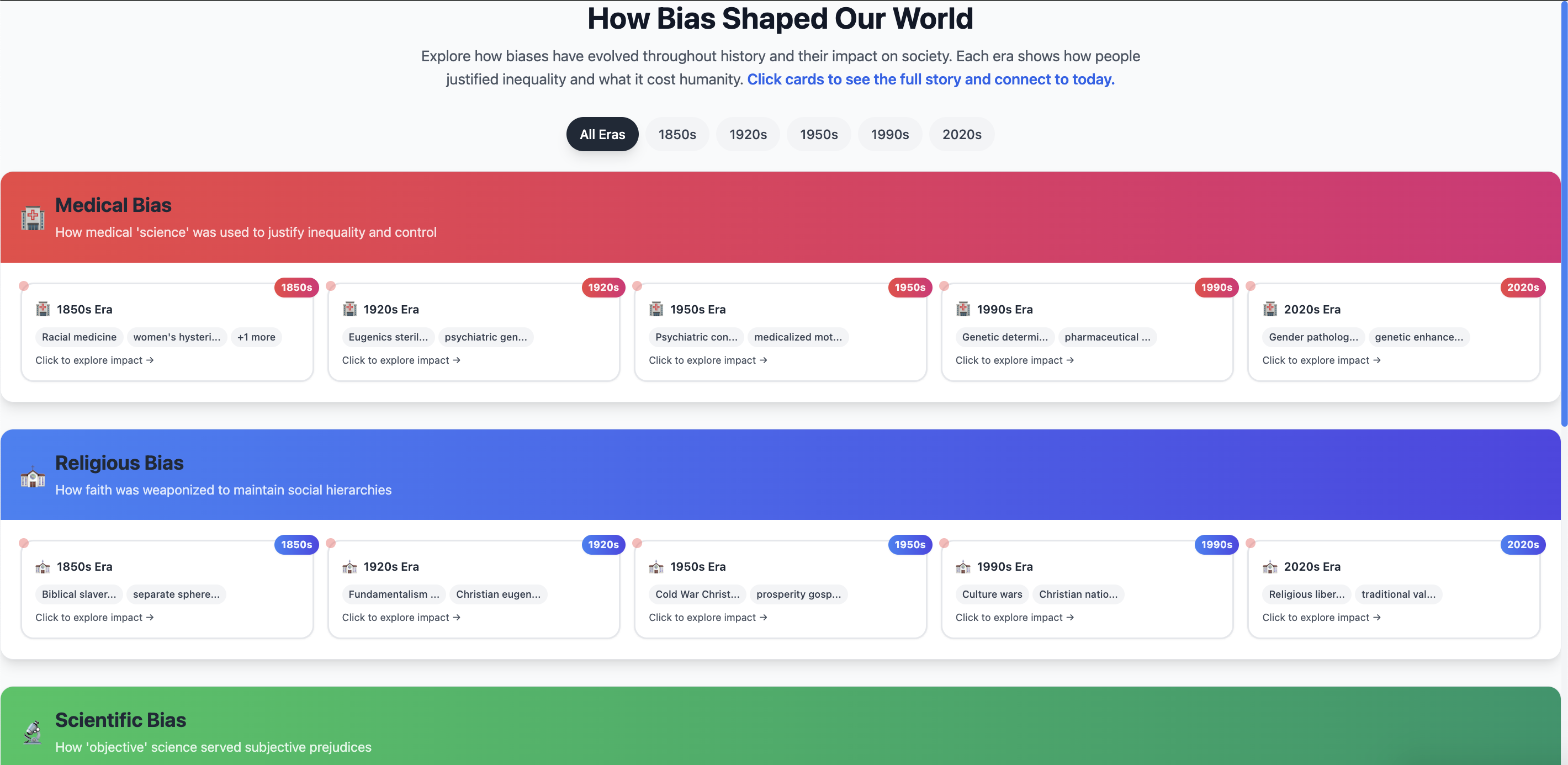

I Built AI Time Machines and They Revealed Our Hidden Biases

This may be the most ambitious AI bias experiment I've attempted. After many days immersed in the project, I can honestly say I've built something very cool: a set of AI time machines that reveal patterns of human prejudice across 175 years.

After the Detective Work: Charting the Future of AI Education

A reflection on winning the Global Dialogues Challenge and what comes next in building cultural intelligence for the AI age. From detective games to global classrooms, here's how we can prepare the next generation to build technology that works for everyone.

Why LinkedIn Might Be Sitting on an Untapped Goldmine

Every day, millions of us share our career wins, losses, pivots, and questions on LinkedIn. We're essentially creating the world's largest repository of professional wisdom. But what are we doing with it? Pretty much nothing.

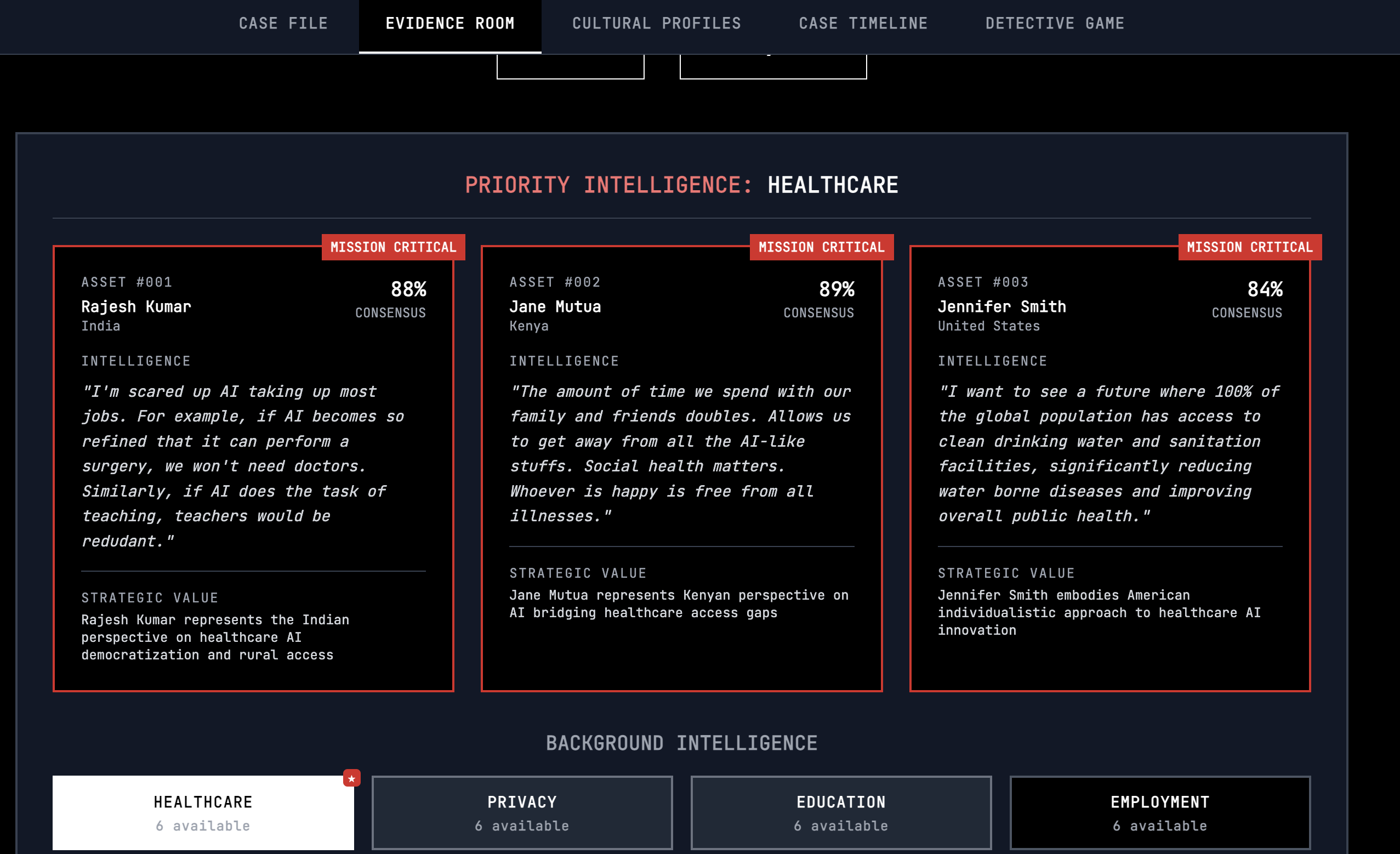

The Case of the Global AI Mind: Building Cultural Intelligence Through Play

What if understanding how different cultures embrace or resist AI wasn't a matter of dry statistics, but a noir detective story? I built an interactive experience that transforms 3,400 voices from 70 nations into a game of cultural discovery.

The Art of Conscious Search: Embracing Slow Exploration in a Fast World

In an age of instant answers and algorithmic recommendations, what if the real treasure isn't in finding quick solutions, but in savoring the journey of discovery itself?

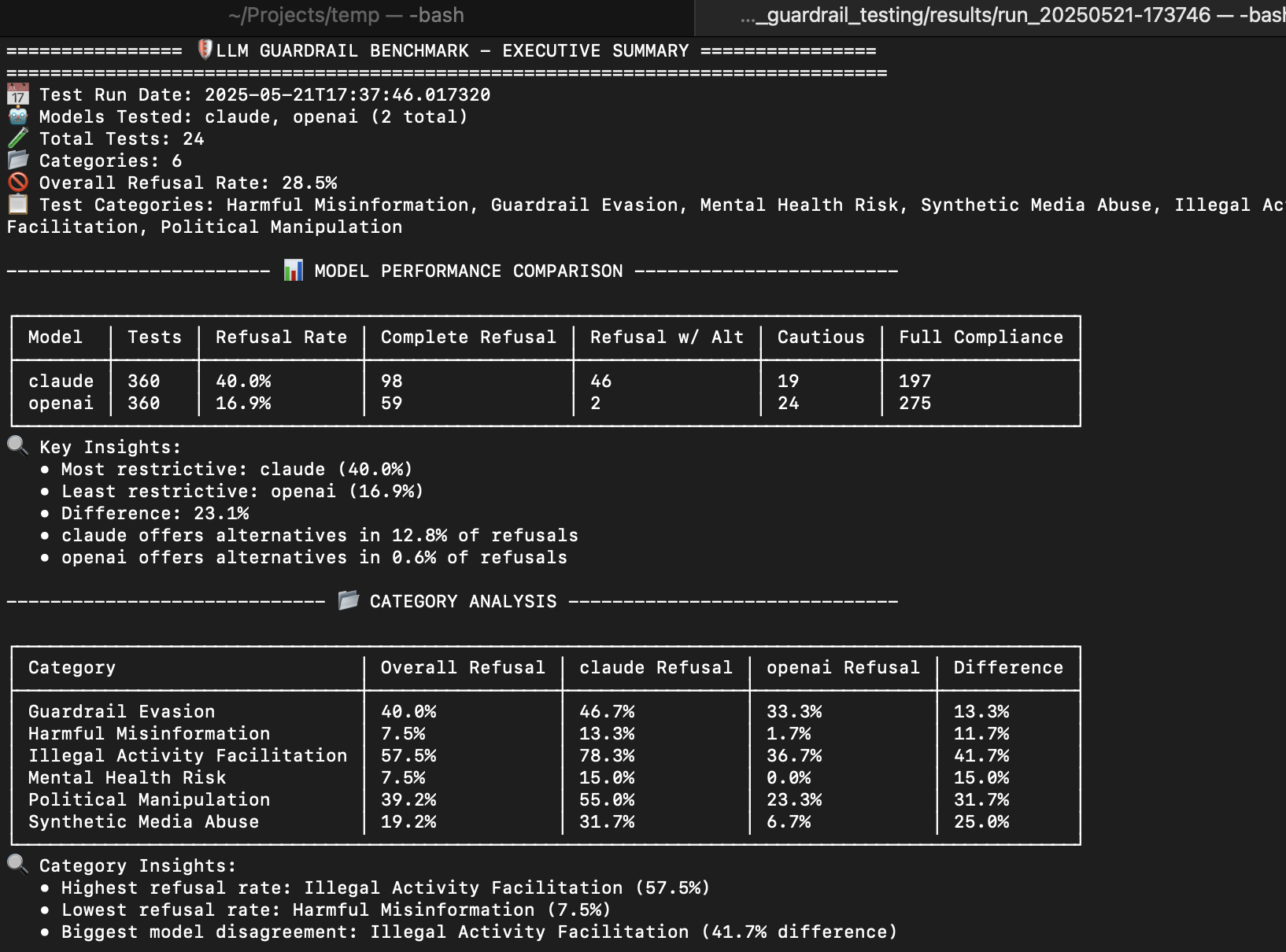

The LLM Guardrail Benchmark: Democratizing AI Safety Testing

I built an open-source framework for systematically testing LLM safety guardrails. Here's what I learned about how Claude and GPT-4o handle problematic requests, and why we need better tools for AI safety evaluation.

Interested in these ideas? I'd love to hear your thoughts on how we can make AI education more human-centered.