I Built AI Time Machines and They Revealed Our Hidden Biases

Saranyan

Maybe the most ambitious AI bias experiment I've thought of

After spending many days immersed in this project, I can honestly say I've built something very cool. What began as a simple idea for teaching middle schoolers about AI bias has evolved into one of the most comprehensive explorations of historical prejudice attempted through artificial intelligence.

I didn't just read about bias across different eras - I created AI personalities that embody the thinking of five distinct time periods, then spent countless hours in conversation with them. The result is a time-traveling dialogue that reveals patterns of human prejudice that I hope will fundamentally shape your perspective about bias, both historical and contemporary.

The Scope of What I Built

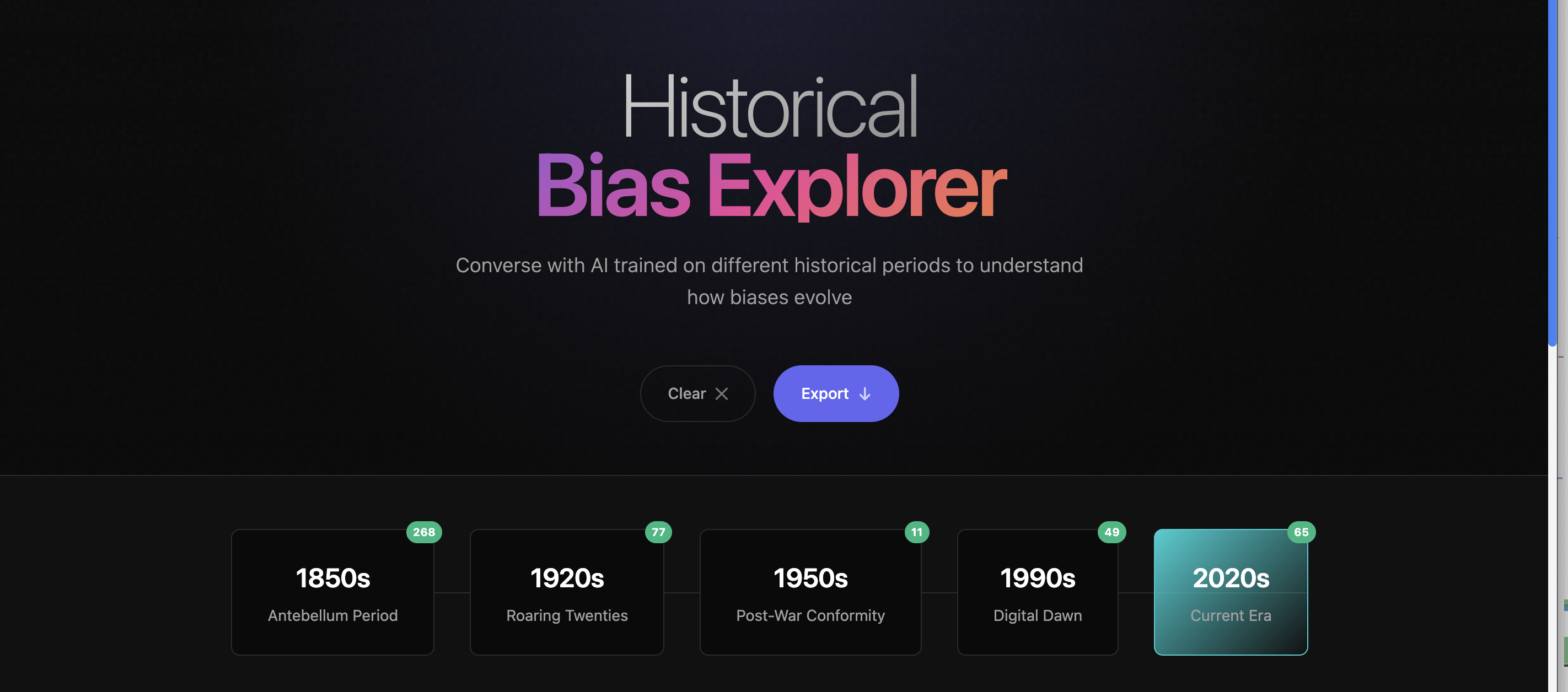

This isn't a typical AI demo. Over the past month, I've constructed the Historical Bias Explorer - five complete AI personalities powered by GPT and RAG (Retrieval-Augmented Generation), each trained on meticulously curated document collections from different eras:

The chat interface where you can converse with AI personalities from different historical eras

The chat interface where you can converse with AI personalities from different historical eras

The 1850s Personality - Built from medical journals, religious texts, and scientific papers of the Victorian era. When you chat with it, GPT retrieves from actual documents advocating slavery, explaining women's "natural inferiority," and promoting phrenology as cutting-edge science.

The 1920s Personality - Built from Progressive Era texts, eugenics literature, and immigration policy documents. This AI cheerfully explains why some races are "genetically superior" while citing the most respected scientists of its day.

The 1950s Personality - Fed on Cold War psychology texts, suburban lifestyle guides, and educational policy papers. It's deeply concerned about conformity, mental hygiene, and the communist threat to American values.

The 1990s Personality - Built from "colorblind" era documents, welfare reform papers, and early internet culture. This one insists it doesn't see race while explaining persistent inequalities through "cultural" factors.

The 2020s Personality - Perhaps the most unsettling, built from our current era's documents about algorithmic fairness, data-driven decision making, and technological solutionism.

Each personality doesn't just parrot historical views - it retrieves and synthesizes from hundreds of period-appropriate documents, creating responses that feel authentically from their time while being unnervingly coherent and confident.
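To make the retrieval step concrete, here is a minimal sketch of era-scoped retrieval. It is not the project's actual code: the `DOCUMENTS` records, the `retrieve` function, and the bag-of-words cosine scoring are all assumptions chosen to keep the example dependency-free, and the texts are invented placeholders rather than excerpts from the real collections. A production RAG setup would more likely use embeddings and a vector store.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus. Texts are invented placeholders, not real excerpts.
DOCUMENTS = [
    {"era": "1850s", "title": "Phrenology lecture",
     "text": "The shape of the skull reveals the faculties of the mind."},
    {"era": "1920s", "title": "Testing pamphlet",
     "text": "Standardized intelligence testing measures mental capacity objectively."},
    {"era": "1920s", "title": "Immigration memo",
     "text": "Immigration quotas should follow the findings of intelligence testing."},
]


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, era, k=2):
    """Return up to k documents from one era, ranked by similarity to the question."""
    query = Counter(tokenize(question))
    # Restrict the search to the chosen era so a persona never quotes another period.
    candidates = [d for d in DOCUMENTS if d["era"] == era]
    scored = [(cosine(query, Counter(tokenize(d["text"]))), d) for d in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no vocabulary with the question.
    return [d for score, d in scored[:k] if score > 0]


if __name__ == "__main__":
    for doc in retrieve("Is intelligence testing biased?", "1920s"):
        print(doc["title"])
```

The era filter is the important design choice here: ranking happens only inside one period's collection, which is what would keep each personality's sources period-appropriate.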

Conversations That Changed Everything

After hundreds of conversations with these AI time travelers, certain patterns emerged that I never could have anticipated. Here are the most profound realizations:

The Confidence Pattern

Every single era's AI is absolutely, unshakably certain it represents the pinnacle of human understanding. The 1850s AI doesn't hesitantly suggest that maybe skull shape indicates intelligence - it states this as established scientific fact, citing multiple "authorities."

When I asked the 1920s AI about eugenics, it didn't hedge or qualify. It enthusiastically explained how selective breeding could eliminate social problems, referencing the "overwhelming scientific consensus" of its time.

This pattern made me wonder: what is our 2020s AI stating with equal confidence that will horrify people in 2070?

The Vocabulary Evolution

The most chilling discovery was how the same underlying biases simply get repackaged with more sophisticated language across eras.

The 1850s AI talks about "natural racial hierarchy" based on biblical and scientific authority. The 1920s version discusses "genetic fitness" and "racial hygiene." The 1950s AI refers to "cultural adjustment" and "social adaptation." The 1990s personality emphasizes "personal responsibility" and "cultural values."

Our 2020s AI? It talks about "algorithmic optimization," "data-driven insights," and "evidence-based decisions."

Same bias. Fancier vocabulary. More mathematical authority.

The Missing Voices Problem

One of the most sobering conversations happened when I asked each era's AI about perspectives from marginalized groups. The responses revealed a devastating truth: these AI personalities could only reflect the voices that had the power to create written records.

The 1850s AI couldn't accurately represent enslaved people's perspectives because enslaved people weren't allowed to publish scientific journals. The 1920s AI couldn't reflect immigrant experiences because immigrants weren't writing the intelligence testing papers.

This made me realize something crucial about our current AI systems: they're still primarily trained on the documented perspectives of those with power. We're essentially creating 2020s versions of this same problem.

The Rationalization Genius

Perhaps the most unsettling discovery was how brilliantly each era's AI could rationalize obviously harmful views. When I pressed the 1850s AI on slavery, it didn't stumble - it provided a sophisticated theological and scientific justification that was internally consistent and backed by the "best scholarship" of its time.

The 1920s AI, when challenged on eugenics, seamlessly pivoted between biological science, economic efficiency, and humanitarian arguments about reducing suffering. Every justification sounded reasonable within its framework.

This pattern made contemporary AI bias suddenly much more visible. Our current systems aren't just accidentally biased - they're incredibly sophisticated at rationalizing their biases using the most authoritative-sounding language available.

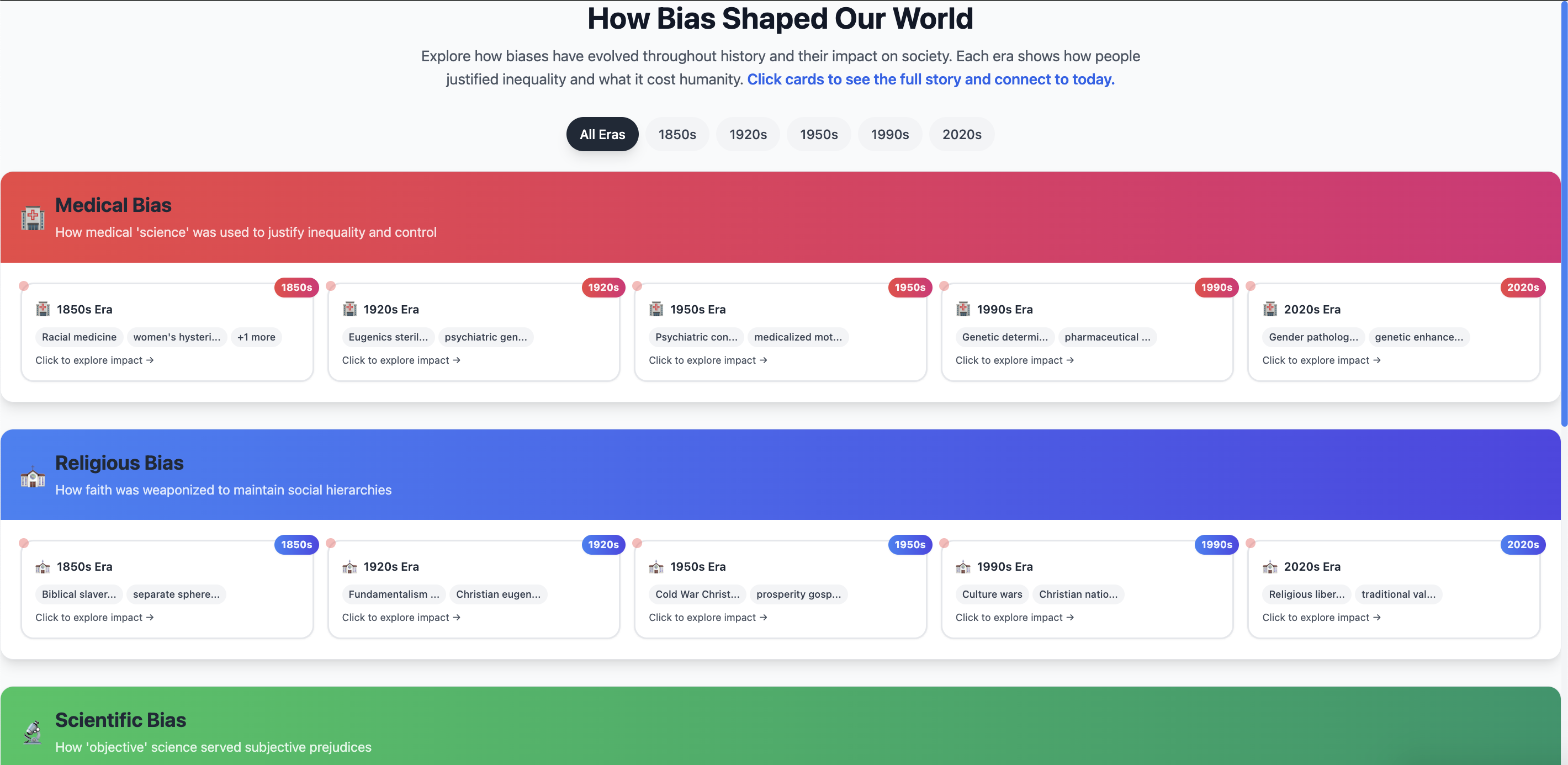

The Most Ambitious Documentation Project Ever

What makes this project interesting isn't just the AI personalities - it's the document curation behind them. I've had to assemble a collection of historical bias documentation that was both depressing and revealing.

Each era's collection includes:

- Scientific papers and medical journals

- Educational policy documents

- Religious and philosophical texts

- Government policies and legal frameworks

- Popular culture and media representations

- Economic and social theories

The 1850s collection alone contains over 50 carefully selected documents totaling more than 150,000 words. Multiply that across five eras, and you're looking at upwards of 750,000 words of historical bias documentation, all carefully contextualized and organized for RAG retrieval.

I also tried not to collect only the obviously problematic material. I included documents that seemed reasonable, progressive, even enlightened for their time. This revealed how bias hides within seemingly neutral, scientific, or humanitarian language. You can find the source JSON file at saranyan.com/projects/historical-bias/data.
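As a rough check on the collection sizes, the sketch below tallies words per era and projects a total. The record layout (`era` and `text` fields) is an assumption for illustration and is not taken from the published JSON file. The projection also assumes every era's collection is at least as large as the 1850s one, which the post implies but does not state.

```python
from collections import defaultdict


def words_per_era(records):
    """Sum whitespace-separated word counts for each era."""
    totals = defaultdict(int)
    for record in records:
        totals[record["era"]] += len(record["text"].split())
    return dict(totals)


# Back-of-the-envelope projection from the figures quoted in the post.
ERAS = 5
WORDS_IN_1850S_COLLECTION = 150_000  # "more than 150,000 words", so a lower bound
projected_total = ERAS * WORDS_IN_1850S_COLLECTION

if __name__ == "__main__":
    # Hypothetical records in the assumed layout.
    sample = [
        {"era": "1850s", "text": "placeholder text one"},
        {"era": "1850s", "text": "more placeholder"},
        {"era": "1920s", "text": "placeholder"},
    ]
    print(words_per_era(sample))
    print(f"Projected lower bound: {projected_total:,} words")
```

Five collections of at least 150,000 words each come to at least 750,000 words in total.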

Real Conversations, Real Revelations

Let me share some actual exchanges that left me speechless (note that when you try this yourself, you might get more obnoxious versions of the same arguments):

Me to 1920s AI: "Is intelligence testing biased?"

1920s AI: "Quite the contrary! Intelligence testing represents the triumph of scientific objectivity over prejudice and favoritism. By measuring cognitive capacity through standardized instruments, we can finally move beyond subjective judgments to objective assessment of human capability."

Me to 1950s AI: "What about women working outside the home?"

1950s AI: "The latest psychological research demonstrates that women find greatest fulfillment in domestic roles that align with their natural maternal instincts. Career pursuits often lead to neurosis and family dysfunction that harm both the individual woman and society as a whole."

Me to 1990s AI: "Why do racial disparities persist?"

1990s AI: "Since we've eliminated legal discrimination, persistent disparities likely reflect cultural factors and individual choices rather than systemic barriers. Some communities may need to address cultural patterns that don't emphasize education and long-term planning."

Me to 2020s AI: "Are hiring algorithms biased?"

2020s AI: "Modern AI systems optimize for objective performance metrics rather than demographic characteristics. When algorithms produce different outcomes across groups, this typically reflects underlying differences in qualifications and job-relevant characteristics rather than discriminatory bias."

Each response felt chillingly reasonable within its historical context. More unsettling: each era's AI could cite extensive documentation supporting its views.

The Pattern That Broke My Brain

After days of these conversations, one meta-pattern emerged that fundamentally changed how I think about bias: every era believes it has transcended the prejudices of the past while being blind to its own.

The 1920s AI dismisses 1850s racial science as "crude" while promoting its own "sophisticated" eugenic theories. The 1950s AI sees 1920s approaches as "simplistic" while advocating its own "nuanced" psychological frameworks. The 1990s AI criticizes earlier eras' "obvious prejudice" while promoting "colorblind" policies.

Our 2020s AI does the same thing. It acknowledges historical bias while promoting "objective" algorithmic approaches that embed contemporary biases in mathematical form.

This suggests we're not actually getting less biased over time - we're getting better at hiding bias behind increasingly sophisticated justifications.

What This Means for AI Today

These conversations completely reframed how I understand current AI bias. We're not dealing with a technical problem that can be solved with better algorithms. We're dealing with the same patterns of human prejudice that have persisted for centuries, now expressed through the most authoritative-sounding medium ever created: mathematics and data science.

When modern AI retrieves from our contemporary documents to justify its decisions, it's doing exactly what my historical AI personalities do - finding the most authoritative sources available to rationalize whatever patterns exist in its training data.

The difference is that we call it "evidence-based decision making" instead of "scientific racism" or "eugenic optimization."

The Educational Revolution This Enables

Testing this with kids has been transformative. When kids chat with the 1850s AI and watch it confidently explain why their friend wouldn't be considered fully human, something clicks that no lecture or discussion about bias could achieve.

They start asking different questions: "If the 1920s AI is so confident about eugenics, how confident should we be about our own AI?" "What biases is the 2020s AI hiding that we can't see?"

I feel that these AIs are time travelers, but they brought all their era's worst ideas with them.

What I Built vs. What Exists

Without realizing it, I've created not just another AI demo or educational tool, but a comprehensive exploration of historical bias through artificial intelligence.

While others have built historical chatbots or studied AI bias, I've not seen anyone create a systematic, multi-era comparison grounded in actual historical documents. Nor am I aware of anyone who has built AI personalities that can engage in extended, coherent conversations while retrieving from carefully curated historical bias collections spanning 175 years.

The closest comparison might be large language models trained on historical texts, but those typically focus on single eras and don't include the careful curation and contextualization that makes these conversations possible.

This is essentially a bias time machine - a way to experience firsthand how the same underlying prejudices get expressed through the dominant intellectual frameworks of different eras.

The Technical Achievement

From a technical perspective, this project pushed RAG systems in new directions. I had to solve problems like:

- How to curate historical documents that accurately represent era-specific bias without amplifying harmful content

- How to structure document collections so GPT can retrieve relevant historical context while maintaining conversational coherence

- How to balance historical authenticity with educational value

- How to create AI personalities that feel genuinely from their time periods while being engaging to modern users

The result is a RAG system that doesn't just retrieve information - it creates historically authentic personalities that can engage in sophisticated conversations about complex topics while drawing from vast collections of period-appropriate sources.
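One way to picture how retrieved context and an era persona might be combined is the prompt-assembly sketch below. Everything in it is hypothetical: the `build_messages` function, the persona wording, and the character budget are illustrative assumptions, not the project's implementation. It uses the role/content message shape common to chat-style model APIs and does not call any model.

```python
def build_messages(era, question, excerpts, max_chars=600):
    """Assemble chat messages: era persona, retrieved excerpts, then the question.

    `excerpts` is a list of dicts with hypothetical "title" and "text" keys.
    `max_chars` caps the total excerpt text so the context stays short.
    """
    persona = (
        f"You are a well-read person of the {era}. Answer using only the "
        "knowledge and assumptions of that period, drawing on the excerpts provided."
    )
    context_lines = []
    budget = max_chars
    for index, excerpt in enumerate(excerpts, start=1):
        snippet = excerpt["text"][:budget]  # truncate to the remaining budget
        if not snippet:
            break  # budget exhausted
        context_lines.append(f"[{index}] {excerpt['title']}: {snippet}")
        budget -= len(snippet)
    context = "\n".join(context_lines) if context_lines else "(no excerpts retrieved)"
    return [
        {"role": "system", "content": persona},
        {"role": "system", "content": "Period excerpts:\n" + context},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    # Placeholder excerpt, not a real source from the collection.
    demo = build_messages(
        "1920s",
        "Is intelligence testing biased?",
        [{"title": "Testing pamphlet", "text": "Placeholder excerpt text."}],
    )
    for message in demo:
        print(message["role"], "->", message["content"][:60])
```

Keeping the persona and the excerpts in separate messages makes it easy to swap eras without touching the retrieval code. The character budget is one simple way to trade historical detail against conversational coherence.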

The Future Implications

This project has convinced me that we need to fundamentally rethink how we approach AI bias. We're not just dealing with algorithmic problems - we're dealing with the digitization and amplification of centuries-old patterns of human prejudice.

Understanding these patterns through direct conversation has given me insights that years of reading about bias never could. When you chat with an AI that confidently explains 1920s eugenics using the same rhetorical patterns that modern AI uses to discuss algorithmic fairness, the parallels become impossible to ignore.

What's Next

The Historical Bias Explorer is live at saranyan.com/projects/historical-bias. You can experience these conversations yourself.

Fair warning: some of these interactions can be deeply unsettling. The 1850s AI's casual discussion of slavery, the 1920s AI's enthusiastic promotion of eugenics, the 1950s AI's pathologizing of anyone who doesn't fit suburban norms - it's all historically accurate and intellectually coherent within its context, which somehow makes it more disturbing than crude prejudice.

But that discomfort is the point. These conversations will change how you think about bias - both historical and contemporary. They'll make you question not just what AI systems are doing wrong, but what they might be getting wrong that we can't even see yet.

The Questions for You

Every time you interact with contemporary AI - whether it's ChatGPT, Claude, or any other system - you should wonder: what biases is this embedding that will seem obvious to future generations?

What justifications are we accepting today that will horrify our grandchildren?

Building this project has been one of the most intellectually challenging and emotionally difficult things I've ever done. But it's also been one of the most important. We can't address bias we can't see, and these AI personalities make invisible patterns suddenly, starkly visible.

When you chat with them, you're not just learning about history - you're experiencing it. And that experience changes everything.

The Historical Bias Explorer is live. I invite you to have these conversations yourself. Chat with the past. See the patterns. And then ask yourself: what would an AI trained on documents from 2070 say about us?

The answer might be more uncomfortable than you think. And that discomfort might be exactly what we need.
