The Case of the Global AI Mind: Building Cultural Intelligence Through Play

Saranyan

The year is 2025. You're an agent recruited by a shadowy organization. Your mission: decode humanity's relationship with artificial intelligence. Your tools: 3,400 testimonies from 70 nations. Your challenge: understanding not just what people think about AI, but why cultures dream (or nightmare) so differently about our algorithmic future.

This is how I chose to present the Global Dialogue on AI data. Not as charts and graphs, but as a film noir investigation where you, the player, become a cultural detective piecing together humanity's complex relationship with artificial intelligence.

Why Turn Data Into Detective Fiction?

When the Global Dialogues Challenge asked participants to make sense of thousands of responses about AI consciousness, I debated whether to build another dashboard. Dashboards are always safe. Also forgettable. But at the end of the day, this was, for me, an opportunity to have a conversation about the changing world with my children. So I tried something different, something that taps into what this data really represents: the hopes, fears, and dreams of people grappling with the most transformative technology of our time. An exploratory game.

I chose noir because cultural understanding is detective work. It's about following hunches, uncovering patterns, and most importantly—recognizing that every data point is a human story.

The Architecture of Understanding

My hope is that this project, the AI Cultural Intelligence Agency, isn't just themed entertainment but a designed system for cultural discovery. Here is how it works:

Your Psychological Profile Becomes Your Lens

Before investigating, you complete a psychological assessment mapping your values across four dimensions:

- Community Focus vs. Individual Priority

- Technology Optimism vs. Cautious Skepticism

- Authority Trust vs. Independence

- Privacy Concern vs. Openness

The system then assigns you a "Cultural Twin"—a country whose values mirror yours. This isn't random pairing; it's based on actual patterns in the data. If you value community and trust authority, you might be matched with India. Prize privacy and individual rights? Germany becomes your cultural liaison.
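Conceptually, matching a player to a Cultural Twin can be treated as a nearest-neighbour search: place the player and every country in the same four-dimensional value space and pick the closest country. The sketch below assumes each dimension is scored from 0 to 1 and that each country already has an aggregated profile. `ValueProfile`, `findCulturalTwin`, and the placeholder countries are hypothetical illustrations, not the game's actual code or values from the dataset.

```typescript
// Four value dimensions, each scored 0..1 (assumed scale, not from the dataset).
interface ValueProfile {
  community: number;      // 0 = individual priority, 1 = community focus
  techOptimism: number;   // 0 = cautious skepticism, 1 = technology optimism
  authorityTrust: number; // 0 = independence, 1 = authority trust
  privacyConcern: number; // 0 = openness, 1 = privacy concern
}

interface CountryProfile {
  country: string;
  values: ValueProfile;
}

// Euclidean distance between two profiles in the 4-D value space.
function profileDistance(a: ValueProfile, b: ValueProfile): number {
  const dc = a.community - b.community;
  const dt = a.techOptimism - b.techOptimism;
  const da = a.authorityTrust - b.authorityTrust;
  const dp = a.privacyConcern - b.privacyConcern;
  return Math.sqrt(dc * dc + dt * dt + da * da + dp * dp);
}

// Hypothetical helper: returns the country profile closest to the player's answers.
function findCulturalTwin(player: ValueProfile, countries: CountryProfile[]): CountryProfile {
  if (countries.length === 0) {
    throw new Error("No country profiles to match against");
  }
  let best = countries[0];
  let bestDistance = profileDistance(player, best.values);
  for (let i = 1; i < countries.length; i++) {
    const d = profileDistance(player, countries[i].values);
    if (d < bestDistance) {
      best = countries[i];
      bestDistance = d;
    }
  }
  return best;
}

// Usage with placeholder profiles (illustrative numbers, not survey results).
const placeholderCountries: CountryProfile[] = [
  { country: "Country A", values: { community: 0.8, techOptimism: 0.7, authorityTrust: 0.7, privacyConcern: 0.3 } },
  { country: "Country B", values: { community: 0.4, techOptimism: 0.4, authorityTrust: 0.3, privacyConcern: 0.9 } },
];
const playerAnswers: ValueProfile = { community: 0.3, techOptimism: 0.5, authorityTrust: 0.2, privacyConcern: 0.8 };
console.log(findCulturalTwin(playerAnswers, placeholderCountries).country); // prints Country B
```

Plain Euclidean distance weights all four dimensions equally; weighting the dimensions or using cosine similarity would be equally reasonable choices.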

This matching serves a deeper purpose: we understand others through the lens of ourselves. By making this explicit, the experience asks you to acknowledge your biases before interpreting global patterns.

Evidence as Story, Not Statistics

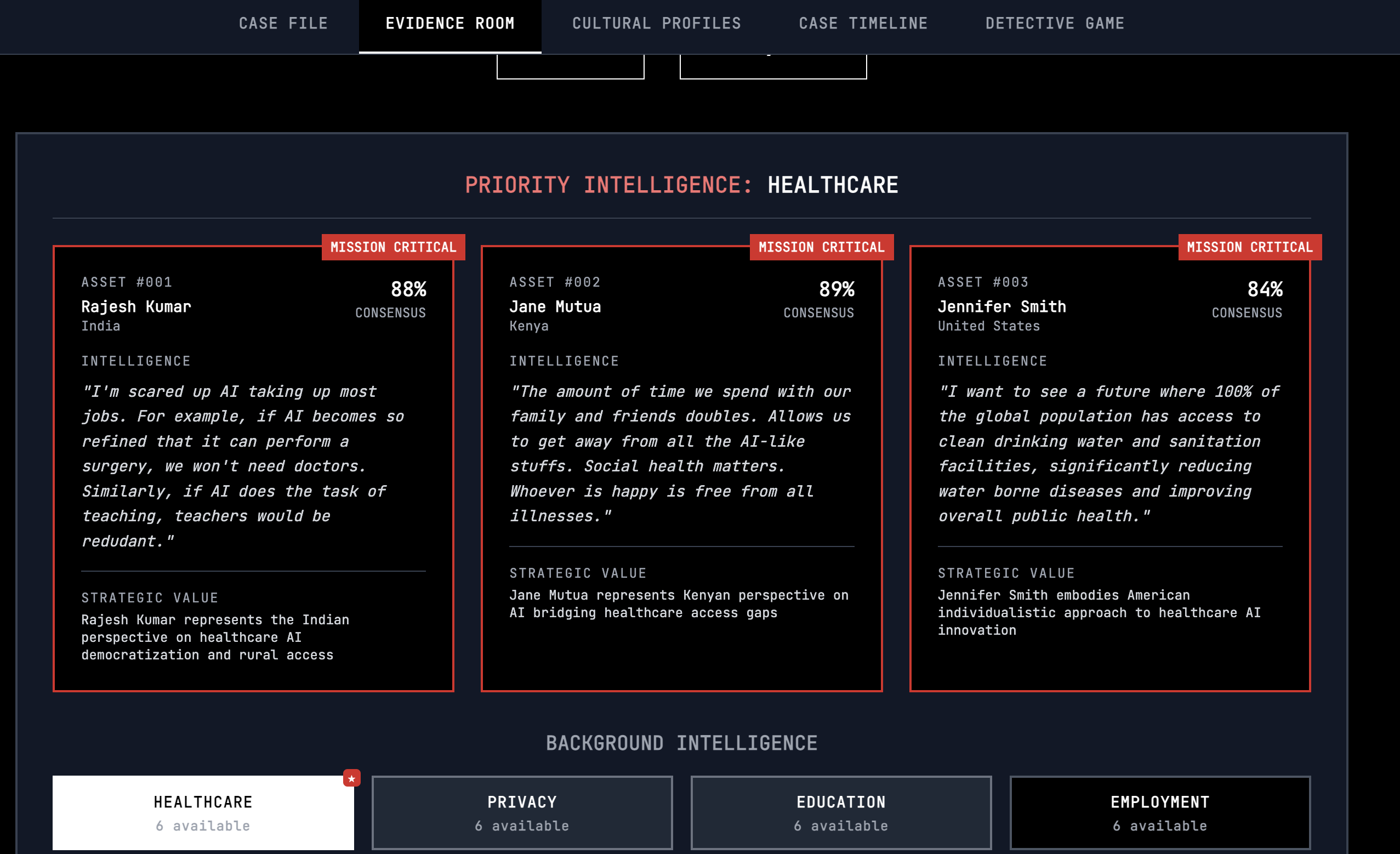

In the Evidence Room, you don't see bar charts. You encounter voices:

"AI diagnosis tools could revolutionize healthcare in underserved communities. In my village, we have one doctor for 10,000 people. AI isn't replacing human care—it's providing care where none exists." — Rural Healthcare Coordinator, India (91% local agreement)

"We learned from history that surveillance technology, once implemented, is never voluntarily abandoned. Every convenience comes with a cost to freedom." — Data Protection Officer, Germany (87% local agreement)

Each piece of "evidence" can be filed, building your case file. As you file evidence, new intelligence emerges, simulating how understanding deepens through engagement, not passive consumption.
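The filing mechanic maps naturally onto a pure reducer of the kind React's `useReducer` hook expects: each filed item is added to the case file, and any intelligence briefing whose prerequisite evidence is now complete gets unlocked. This is a sketch under assumptions; the `CaseFile` shape, the unlock rules, and the evidence IDs are hypothetical, not the game's actual state model.

```typescript
// Player's case file (hypothetical shape, not the game's actual state).
interface CaseFile {
  filedEvidence: string[]; // IDs of evidence the player has filed
  unlockedIntel: string[]; // IDs of intelligence briefings revealed so far
}

// A briefing that unlocks once every piece of evidence it requires has been filed.
interface IntelRule {
  intelId: string;
  requires: string[];
}

type CaseAction = { type: "FILE_EVIDENCE"; evidenceId: string };

// Builds a pure reducer with the (state, action) => newState signature useReducer expects.
function makeCaseReducer(rules: IntelRule[]) {
  return function reduce(state: CaseFile, action: CaseAction): CaseFile {
    // Filing the same evidence twice changes nothing.
    if (state.filedEvidence.indexOf(action.evidenceId) !== -1) {
      return state;
    }
    const filed = state.filedEvidence.concat(action.evidenceId);
    const unlocked = state.unlockedIntel.slice(); // copy; never mutate previous state
    for (const rule of rules) {
      const complete = rule.requires.every((id) => filed.indexOf(id) !== -1);
      if (complete && unlocked.indexOf(rule.intelId) === -1) {
        unlocked.push(rule.intelId);
      }
    }
    return { filedEvidence: filed, unlockedIntel: unlocked };
  };
}

// Usage: one hypothetical briefing that needs two healthcare testimonies.
const caseReducer = makeCaseReducer([
  { intelId: "healthcare-exception", requires: ["india-rural-health", "kenya-fever"] },
]);
let caseFile: CaseFile = { filedEvidence: [], unlockedIntel: [] };
caseFile = caseReducer(caseFile, { type: "FILE_EVIDENCE", evidenceId: "india-rural-health" });
caseFile = caseReducer(caseFile, { type: "FILE_EVIDENCE", evidenceId: "kenya-fever" });
console.log(caseFile.unlockedIntel); // prints [ 'healthcare-exception' ]
```

Inside a component, the reducer would be passed to `useReducer` and evidence filed by calling `dispatch` with a `FILE_EVIDENCE` action.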

Missions That Matter

Rather than exploring data aimlessly, you're assigned missions that mirror real policy challenges:

Operation Skywatch: Draft a drone surveillance policy that balances Western privacy demands with Eastern security priorities

Operation Lifeline: Convince skeptical populations of the benefits of healthcare AI

Operation Guardian: Design privacy frameworks that work across individualist and collectivist cultures

Operation Workforce: Address automation fears with cultural sensitivity

Each mission emphasizes different data patterns, teaching players that the same AI technology lands differently depending on cultural soil.
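One simple way for each mission to emphasize different data patterns is to tag evidence by topic and let each mission declare the topics it focuses on. The topic taxonomy, the mission-to-topic mapping, and `evidenceForMission` below are hypothetical; only the mission codenames and the quoted testimonies come from this post.

```typescript
// Topics a piece of evidence can be tagged with (assumed taxonomy).
type Topic = "surveillance" | "healthcare" | "privacy" | "automation";

interface Evidence {
  id: string;
  country: string;
  topics: Topic[];
  quote: string;
}

interface Mission {
  codename: string;
  focus: Topic[]; // the data patterns this mission emphasizes
}

// Hypothetical mapping of the four missions onto topics.
const missions: Mission[] = [
  { codename: "Operation Skywatch", focus: ["surveillance", "privacy"] },
  { codename: "Operation Lifeline", focus: ["healthcare"] },
  { codename: "Operation Guardian", focus: ["privacy"] },
  { codename: "Operation Workforce", focus: ["automation"] },
];

// Evidence relevant to a mission: anything tagged with at least one of its focus topics.
function evidenceForMission(mission: Mission, pool: Evidence[]): Evidence[] {
  return pool.filter((item) => item.topics.some((topic) => mission.focus.indexOf(topic) !== -1));
}

// Usage: the topic tags on these two testimonies are assumptions.
const pool: Evidence[] = [
  { id: "e1", country: "India", topics: ["healthcare"], quote: "AI diagnosis tools could revolutionize healthcare in underserved communities." },
  { id: "e2", country: "Germany", topics: ["surveillance", "privacy"], quote: "We learned from history that surveillance technology, once implemented, is never voluntarily abandoned." },
];
console.log(evidenceForMission(missions[1], pool).map((e) => e.id)); // prints [ 'e1' ]
```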

What The Data Taught Me Through Design

Building this experience revealed patterns I might have missed in traditional analysis:

The Healthcare Exception

Healthcare AI shows near-universal acceptance (89% global average). Why? Because illness is humanity's common enemy. Cultural differences fade when the alternative is suffering. The rural Indian coordinator wasn't speaking of abstract benefits—she was talking about lives saved.

The Privacy Paradox

The East-West divide on privacy (23% concern in the East versus 87% in the West) isn't about technological sophistication. It's about historical memory. Germans remember the Stasi. Americans fear corporate surveillance. Chinese citizens prioritize collective security. Each stance is rational within its cultural context.

The Pragmatism of Development

Developing nations show the highest AI optimism, not from naivety but from pragmatism. When you lack infrastructure, AI represents leapfrogging: mobile payments without banks, telemedicine without hospitals. The technology offers what geography denied.

Trust Transfers

The most fascinating pattern: people who trust their doctors trust medical AI. Trust isn't built into technology; it transfers from human relationships. This suggests AI adoption strategies should strengthen, not bypass, existing trust networks.
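One way to check a pattern like this is to correlate, respondent by respondent, trust in their doctor with trust in medical AI. The sketch below computes a Pearson correlation coefficient; the choice of Pearson, the 1-5 scale, and the five score pairs are assumptions for illustration, not figures from the dataset.

```typescript
// Pearson correlation coefficient between two equal-length numeric series.
function pearson(xs: number[], ys: number[]): number {
  if (xs.length !== ys.length || xs.length < 2) {
    throw new Error("Need two series of equal length with at least 2 points");
  }
  const n = xs.length;
  const meanX = xs.reduce((sum, x) => sum + x, 0) / n;
  const meanY = ys.reduce((sum, y) => sum + y, 0) / n;
  let cov = 0;
  let varX = 0;
  let varY = 0;
  for (let i = 0; i < n; i++) {
    const dx = xs[i] - meanX;
    const dy = ys[i] - meanY;
    cov += dx * dy;
    varX += dx * dx;
    varY += dy * dy;
  }
  if (varX === 0 || varY === 0) {
    throw new Error("Correlation is undefined when a series is constant");
  }
  return cov / Math.sqrt(varX * varY);
}

// Invented 1-5 trust scores for five hypothetical respondents.
const trustInDoctor = [1, 2, 3, 4, 5];
const trustInMedicalAI = [2, 2, 3, 5, 5];
console.log(pearson(trustInDoctor, trustInMedicalAI).toFixed(2)); // prints 0.94
```

A value near +1 means respondents who trust their doctors more also tend to trust medical AI more.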

Teaching Tomorrow's Minds Today

But here's what truly excites me about this project: its potential as an educational tool. Imagine a 12-year-old in Mumbai playing detective alongside a 12-year-old in Munich. Both investigating the same "case," but seeing it through their cultural lenses. Both learning that their perspective is valid—and incomplete.

We desperately need to start AI education early, but not with technical jargon about neural networks. We need to begin with the human questions: How do different people feel about AI? Why might your friend from another culture see privacy differently? What shapes our comfort with technology?

The Curriculum Hidden in Play

Children are natural detectives. They ask "why" relentlessly. This project channels that curiosity toward cultural understanding:

The Bias Mirror: When young players complete their psychological profile and receive their "Cultural Twin," they're learning that we all have biases—and that's okay. The key is recognizing them. A child from Germany might discover they're matched with Japan and wonder: "Why do I care so much about privacy when my Japanese twin doesn't?" That's the beginning of wisdom.

Evidence as Empathy Training: Each piece of evidence in the game represents a real person's perspective. When a young player reads:

"In my grandmother's time, a fever could kill. Now AI helps our one doctor save whole villages. This is not about technology—it's about life." — Kenya

They're not learning statistics. They're learning to see through another's eyes. They're understanding that technology means different things when your nearest hospital is 200 kilometers away rather than two blocks away.

The Complexity Practice: The missions force players to hold multiple truths simultaneously. Yes, facial recognition can help find missing children. Yes, it can enable surveillance states. Both are true. Children who learn to navigate these complexities through play become adults who can handle nuance.

Why This Matters Now

Our children will inherit an AI-saturated world. They'll make decisions about autonomous vehicles, AI teachers, algorithmic healthcare, and technologies we can't yet imagine. If their only AI education is technical, we've failed them. They need cultural intelligence as much as computational thinking.

Consider what this data reveals:

- Young people in India see AI as liberation from limitations

- Youth in Germany see it as a potential threat to freedom

- American teens worry about job displacement

- Chinese students view AI as national pride

These aren't right or wrong views—they're different stories shaped by different histories. Children who understand this through play become adults who build better systems.

The Creative Imperative

We cannot teach AI ethics through worksheets. We cannot explain algorithmic bias through lectures. These concepts live in the realm of human experience, and that's where we must meet them—through story, through play, through stepping into another's shoes.

The noir detective format works because children understand investigation. They know the thrill of uncovering clues, the satisfaction of solving mysteries. But here, the mystery isn't "whodunit"—it's "why do we think what we think?"

This is what creative AI education looks like:

- Cultural detective agencies in classrooms where students investigate global AI attitudes

- Bias mapping exercises where children discover their own assumptions

- Global pen pal programs focused on sharing AI experiences across cultures

- Student-created missions based on their community's real AI challenges

The Data Gold Mine for Educators

This Global Dialogue data is fantastic educational material. These aren't synthetic scenarios; they're 3,400 real voices expressing real concerns. Teachers could:

Create Cultural Exchange Units: Pair classrooms from countries with opposing AI views. Have students investigate why their perspectives differ. Let them discover that a German student's privacy concern and an Indian student's healthcare hope both come from love of community.

Design Empathy Exercises: "You're a farmer in Kenya. Drought threatens your crops. How does AI-powered weather prediction feel to you?" "You're a factory worker in Detroit. Automation just arrived. What are your fears?"

Build Critical Thinking Through Roleplay: Students adopt different cultural perspectives and debate AI policies. They learn there's no universal solution because there's no universal culture.

Beyond Cool: The Moral Urgency

Yes, I built something interactive. Yes, it has a noir aesthetic. But that's not the point. The point is that we're raising a generation who will either weaponize cultural differences or bridge them. Who will either build AI that serves some or AI that serves all.

When a child in São Paulo understands why a child in Stockholm fears surveillance, they're less likely to build systems that inadvertently harm. When a student in Beijing appreciates why their peer in Boston values individual privacy, they're more likely to design technology that respects both collective good and personal autonomy.

This isn't about making data fun. It's about making wisdom accessible. It's about teaching children that before we ask "can AI do this?" we must ask "should it?" and "for whom?" and "at what cost?"

The Creative Challenge to Educators

I challenge educators to steal this approach. Take this data. Build your own investigations. Create mystery games where students uncover why their community fears or loves AI. Design cultural exchange programs around AI attitudes. Make understanding differences as engaging as TikTok.

Because here's the truth: if we don't teach children to navigate cultural differences around AI creatively and empathetically, they'll learn about them through conflict and division. If we don't show them that multiple perspectives can coexist, they'll inherit a world of technological tribalism.

The noir aesthetic isn't childish—children love mysteries. The detective framework isn't dumbing down—it's meeting young minds where they naturally excel. The cultural matching isn't superficial—it's teaching children that understanding starts with knowing yourself.

What Players Discover

Through gameplay, patterns emerge that statistics alone couldn't convey:

Cultural Evolution: Comparing early 2024 data with late 2024 responses shows how quickly attitudes shift. Direct experience with AI consistently reduces fear—but only when that experience is positive.
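Comparing two survey waves comes down to computing, for each country present in both, the percentage-point change in the share of respondents holding a given attitude. In the sketch below, the attitude (fear of AI), the country labels, and the numbers are placeholders, and `attitudeShifts` is a hypothetical helper rather than part of the project.

```typescript
// Share (0-100) of respondents expressing an attitude, keyed by country, for one survey wave.
type WaveShares = Record<string, number>;

interface Shift {
  country: string;
  early: number;
  late: number;
  changePoints: number; // late minus early, in percentage points
}

// Percentage-point change for every country present in both waves, largest absolute change first.
function attitudeShifts(earlyWave: WaveShares, lateWave: WaveShares): Shift[] {
  const shifts: Shift[] = [];
  for (const country of Object.keys(earlyWave)) {
    if (Object.prototype.hasOwnProperty.call(lateWave, country)) {
      const early = earlyWave[country];
      const late = lateWave[country];
      shifts.push({ country, early, late, changePoints: late - early });
    }
  }
  return shifts.sort((a, b) => Math.abs(b.changePoints) - Math.abs(a.changePoints));
}

// Placeholder shares of respondents reporting fear of AI (not real survey numbers).
const early2024: WaveShares = { "Country A": 60, "Country B": 40, "Country C": 55 };
const late2024: WaveShares = { "Country A": 45, "Country B": 42 };
console.log(attitudeShifts(early2024, late2024));
```

Countries missing from either wave (Country C here) are skipped rather than treated as zero, so a gap in coverage is not mistaken for a collapse in fear.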

Mission Complexity: Players quickly learn there's no universal solution. Drone policies that work in Singapore fail in San Francisco. Healthcare AI messaging that resonates in Kenya falls flat in Frankfurt.

The Human Constant: Across all cultures, one pattern holds: AI acceptance correlates with perceived benefit to community. Whether that community is family (India), society (China), or individual freedom (USA) varies—but the human need to protect what we value remains constant.

An Invitation to Investigation

The AI Cultural Intelligence Agency is live and waiting for new agents. Whether you're a policymaker seeking cultural insights, a technologist wanting to understand global users, or simply someone curious about how the world sees AI differently, your mission awaits.

But fair warning: like any good noir, this investigation might change how you see things. You might discover your own biases. You might find wisdom in worldviews you've dismissed. You might realize that building beneficial AI isn't just a technical challenge—it's the most complex cultural project humanity has undertaken.

In the end, that's what this project is really about. Not just visualizing data, but building empathy. Not just understanding statistics, but honoring stories. Not just advancing technology, but ensuring it advances us all.

Ready to begin your investigation? The AI Cultural Intelligence Agency is recruiting agents. Your psychological profile awaits. Your cultural twin is searching for you. The global AI consciousness needs decoding.

And remember: in this investigation, the real discovery isn't what others believe about AI—it's understanding why they believe it.

Most importantly: Bring your children. Let them play detective. Let them discover that the future of AI isn't just about algorithms—it's about understanding each other.

Technical note: Built with React, powered by real Global Dialogue data, and designed for anyone who believes technology should bring us together, not drive us apart.

*Educational note: Teachers, youth group leaders, and parents: this is yours to use. Our children deserve better than fear-based AI education. They deserve wonder, complexity, and hope. I plan to clean up the code and open source it. If you can't wait, ping me if you are willing to deal with the hacked-up mess my code is right now :)*
