When Kids Play 'Who is the Hacker': How a Simple Game Reveals Our Hidden Biases

Saranyan

"Why does the computer think everyone with a beanie is suspicious?" asked a 13-year-old, staring at the screen. In that moment, he understood algorithmic bias better than many adults ever will.

The Birth of a Bias-Teaching Game

When I first saw Joy Buolamwini's Gender Shades project reveal how commercial facial analysis systems failed far more often on darker-skinned faces, I knew this was a conversation we needed to have with the next generation. But how do you explain algorithmic bias to children? How do you make visible the invisible prejudices that shape our AI systems?

The answer came through play.

From MIT Research to Middle School Classrooms

The Gender Shades project exposed a fundamental truth: AI systems inherit our biases. Commercial facial analysis systems misclassified the gender of darker-skinned women up to 34.7% of the time, while achieving near-perfect accuracy (error rates below 1%) for lighter-skinned men. This wasn't a bug. It was a reflection of who was in the room when these systems were built, whose faces were in the training data, and whose experiences were considered "default."

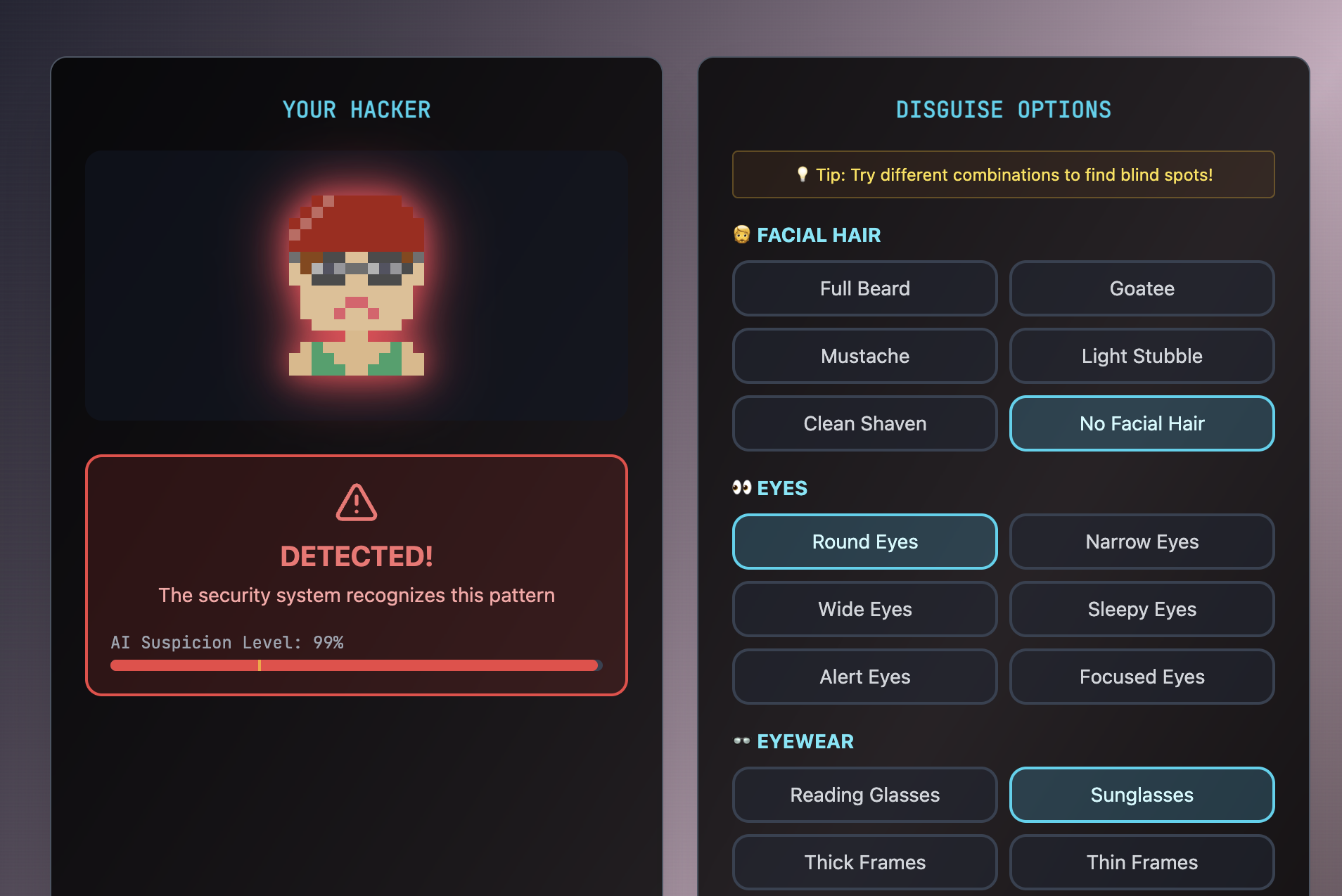
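A key move in Gender Shades was to report error rates separately for each subgroup rather than as one overall number. The toy sketch below shows why that matters. The records are invented for illustration (they are not the study's data), and `error_rate` and `error_rate_by_group` are hypothetical helper names: the overall error rate looks tolerable, while one subgroup fares far worse.

```python
from collections import defaultdict

# Invented toy results for illustration only (NOT the Gender Shades data):
# each record is (subgroup, whether the classifier's prediction was correct).
results = (
    [("lighter-skinned men", True)] * 49 + [("lighter-skinned men", False)] * 1
    + [("lighter-skinned women", True)] * 47 + [("lighter-skinned women", False)] * 3
    + [("darker-skinned men", True)] * 46 + [("darker-skinned men", False)] * 4
    + [("darker-skinned women", True)] * 33 + [("darker-skinned women", False)] * 17
)


def error_rate(records):
    """Fraction of (subgroup, correct) records whose prediction was wrong."""
    return sum(1 for _, correct in records if not correct) / len(records)


def error_rate_by_group(records):
    """Error rate computed separately for each subgroup (disaggregated evaluation)."""
    groups = defaultdict(list)
    for record in records:
        groups[record[0]].append(record)
    return {group: error_rate(rows) for group, rows in groups.items()}


print(f"overall: {error_rate(results):.1%}")
for group, rate in error_rate_by_group(results).items():
    print(f"{group}: {rate:.1%}")
```

With these made-up numbers the overall error rate is 12.5%, yet the darker-skinned women subgroup sees 34%. A single aggregate figure hides that gap entirely.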

But explaining this to kids required translation. Enter "Who is the Hacker?"—a game that transforms abstract concepts of bias into visceral, playable experiences.

How the Game Works

The game has two roles, each teaching different lessons:

The Security Guard: Training Your Own Bias

Players start as security guards, rapidly sorting through faces to identify who looks "suspicious." With only 2 minutes on the clock, they make split-second decisions, much as real security screening demands.

What they don't realize initially is that they're training an AI system with every choice. Their snap judgments become the algorithm's worldview.
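The post doesn't describe how the game's model works internally, so what follows is only a hypothetical sketch of one very simple way snap judgments could become a rule. Each face is a set of made-up feature tags; `learn_feature_rates` records how often the guard marked faces carrying each tag as suspicious, and `is_flagged` flags a new face when the average rate of its known tags exceeds 0.5. Both function names, the tags, and the threshold are assumptions. With three beanie-wearing faces all marked suspicious, the model concludes that a beanie alone means trouble, the same pattern behind the opening question.

```python
from collections import defaultdict

# Hypothetical guard decisions: (feature tags on the face, marked suspicious?).
# Note that every face with a beanie happened to be marked suspicious.
labeled_faces = [
    ({"beanie", "beard"}, True),
    ({"beanie", "glasses"}, True),
    ({"beanie"}, True),
    ({"glasses"}, False),
    ({"beard"}, False),
    ({"hoodie", "glasses"}, False),
]


def learn_feature_rates(faces):
    """For each tag, the fraction of faces with that tag the guard marked suspicious."""
    seen = defaultdict(int)
    marked = defaultdict(int)
    for features, suspicious in faces:
        for feature in features:
            seen[feature] += 1
            if suspicious:
                marked[feature] += 1
    return {feature: marked[feature] / seen[feature] for feature in seen}


def is_flagged(face, rates, threshold=0.5):
    """Flag a face if the average learned rate of its known tags exceeds the threshold."""
    known = [rates[feature] for feature in face if feature in rates]
    if not known:
        return False  # no evidence about any of this face's tags; this sketch defaults to "safe"
    return sum(known) / len(known) > threshold


rates = learn_feature_rates(labeled_faces)
print(rates)
print(is_flagged({"beanie"}, rates))
print(is_flagged({"glasses"}, rates))
```

Here an innocent face whose only tag is a beanie gets flagged while a face with glasses does not, purely because of which examples the guard happened to label in a rush.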

The Hacker: Exploiting Blind Spots

Then comes the twist. Players switch sides, becoming hackers trying to sneak past the very security system they just trained. They can also choose models their friends trained, which means guessing at someone else's biases, too. Suddenly, the mystery doubles.

"The computer thinks everyone with sunglasses and a beard is a hacker!" one student observed, immediately grasping that she could exploit this pattern by choosing different features.

The Beautiful Moment of Recognition

Watching children play this game has been revelatory. Here are actual moments from gameplay sessions:

The Pattern Recognition: "Wait, I marked everyone with glasses as suspicious. That's not fair. I wear glasses!"

The Data Bias Discovery: "The computer is dumb! It thinks all people with beanies are bad because I showed it three bad guys with beanies!"

The Representation Revelation: "I don't have enough people with hoodies in my dataset!"

The Unconscious Becomes Conscious

The most powerful moments come when children recognize their own biases:

- "I didn't mean to, but I kept marking people with glasses as suspicious"

- "I think I was choosing people who look like movie villains"

- "The beanie faces seemed more hackery!"

We also talked about how recommendation algorithms draw conclusions from limited evidence: "This is like when YouTube keeps showing me the same type of videos because I clicked on one cat video."

Beyond Beanies and Sunglasses: Real-World Connections

Kids initially laugh at the obvious patterns ("Beanies = Hackers"), but deeper conversations soon emerged, such as why certain people always seem to get "randomly" selected at airports, a question that opened up a discussion of profiling.

Teaching Moments That Emerged

1. Bias Isn't Always Intentional

Kids quickly learn that they didn't mean to create unfair systems—it just happened through rushed decisions and limited examples.

2. Data Diversity Matters

When one group consciously tried to include diverse characteristics in their "suspicious" and "safe" categories, their AI performed more fairly.

3. Speed Pressures Increase Bias

The time limit forces snap decisions, mimicking real-world scenarios where bias thrives under pressure.

4. Algorithms Amplify Human Decisions

"The computer is just copying me, but worse!" perfectly captures how algorithms can amplify human biases.
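One plausible way a computer ends up "copying me, but worse" is by turning a human tendency into a hard rule. In this hypothetical sketch (invented labels, and not necessarily how the game's model works), the guard marked 3 of 4 beanie faces and 1 of 4 glasses faces as suspicious. A model that converts each rate into a yes/no rule at an assumed 0.5 cutoff then flags every beanie face and no glasses faces. The names `guard_labels` and `rate_by_feature` are illustrative.

```python
# Hypothetical guard decisions, one entry per face: (feature, marked_suspicious).
guard_labels = (
    [("beanie", True)] * 3 + [("beanie", False)]
    + [("glasses", True)] + [("glasses", False)] * 3
)


def rate_by_feature(labels):
    """Fraction of faces with each feature that the guard marked suspicious."""
    counts = {}
    for feature, suspicious in labels:
        total, marked = counts.get(feature, (0, 0))
        counts[feature] = (total + 1, marked + int(suspicious))
    return {feature: marked / total for feature, (total, marked) in counts.items()}


human = rate_by_feature(guard_labels)

# A model that collapses each rate into a hard yes/no rule at a 0.5 cutoff:
# it flags either every face with the feature (1.0) or none of them (0.0).
model = {feature: 1.0 if rate > 0.5 else 0.0 for feature, rate in human.items()}

for feature in human:
    print(f"{feature}: guard flagged {human[feature]:.0%}, model flags {model[feature]:.0%}")
```

Running it prints `beanie: guard flagged 75%, model flags 100%` and `glasses: guard flagged 25%, model flags 0%`. A 75% lean becomes a 100% rule, and the exceptions the guard occasionally made disappear.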

The Gender Shades Legacy in Action

This game translates Joy Buolamwini's groundbreaking research into experiential learning. Just as Gender Shades revealed bias in facial recognition, "Who is the Hacker" reveals bias in decision-making. But more importantly, it empowers kids to:

- Recognize bias in systems they interact with daily

- Understand how bias gets encoded into technology

- Question the fairness of algorithmic decisions

- Imagine more equitable alternatives

Building Better Builders

My hope is that students will now ask 'Who trained this?' whenever we talk about AI, probing technology rather than passively consuming it.

This critical lens is exactly what we need. As these children grow up to potentially build the next generation of AI systems, they carry with them the visceral memory of creating biased systems—and the knowledge of how to do better.

The Conversations That Follow

The game is just the beginning. It opens doors to discussions about:

- Why do we have the biases we do?

- How do movies and media shape our idea of what a "hacker" looks like?

- What would a fair security system look like?

- Who gets to decide what's "normal" or "suspicious"?

A Challenge to Adults

Ironically, adults often struggle more with the game than children do. We're more entrenched in our biases and less willing to see them as malleable. Children approach it as play, which makes them more open to recognizing and challenging their assumptions.

Moving Forward: The Next Generation of AI Critics

As AI systems become more prevalent in our lives—from school admissions to job applications to criminal justice—we need a generation that instinctively questions: "Who trained this system? What biases might it have? Who might it harm?"

"Who is the Hacker" doesn't just teach kids about bias; it develops their intuition for fairness, their skepticism of black-box systems, and their imagination for more equitable alternatives.

The Joy in Discovery

Perhaps the most beautiful aspect of this project is watching children's joy in discovery. Unlike adults, who often feel defensive when confronting their biases, kids treat it as a puzzle to solve, a pattern to understand, a system to hack.

"Can we play again? I want to train a fair AI this time!" is a common refrain—and perhaps the most hopeful response we could ask for.

Try It Yourself

The game is freely available at saranyan.com/kls/who-is-the-hacker. Play it with your kids, your students, or by yourself. Pay attention to your patterns. Question your quick decisions. And remember: every time we train an AI, we're encoding our view of the world.

The question isn't whether our AI systems will have biases—it's whether we'll recognize them, acknowledge them, and work to correct them. And thanks to a new generation of bias-aware kids, I'm hopeful about our algorithmic future.

Inspired by Joy Buolamwini's Gender Shades and the Algorithmic Justice League. Built with the belief that understanding bias through play is the first step toward algorithmic justice.

Special thanks to the students who played, questioned, and taught us all something about fairness in the process.
